In the peace of one’s own home, a power outage can be a minor inconvenience and perhaps even a welcome opportunity to sit by candlelight and take a break from the usual hustle and bustle. However, when electricity is missing for hours or even days, outages begin to threaten lives and cost billions of dollars. When the power grid goes down, access to medical care, clean water, and fresh food is threatened, and personal safety is ultimately at risk. In addition, disruption to the economy is daunting as closed businesses and interrupted financial markets jeopardize people’s livelihoods.

Some natural disasters, such as hurricanes and solar storms, come with warning so that utility companies can prepare. But even with advance notice, the power can still be out for long periods of time while companies scramble to fix downed lines. When Hurricane Sandy soaked New York City on October 29, 2012, about eight million customers across 21 states lost power, and some did not have it restored until Thanksgiving. In 2017, Hurricane Maria pummeled the island of Puerto Rico, and it took nearly a year to restore power. Although the deaths associated with that disaster are attributed to a variety of causes, some were linked to the loss of the electricity needed to run medical respirators.


One reason that restoring power can take an extended amount of time is that the nation’s infrastructure is old. Although access to electricity is the lifeblood of modern society, the power grid itself is not modern. As Americans’ demand for, and dependence on, electricity steadily increases, many experts have been calling for an upgrade to make the grid more reliable and resilient. But reducing the time it takes to turn the power back on after a blackout is not the only reason to modernize the grid: incorporating renewable sources of electricity would make energy consumption more sustainable, and adding usage sensors and feedback mechanisms could make its distribution more efficient. Last, but certainly not least, not all potential power outages are due to natural disasters. Society’s critical dependence on electricity makes the grid a target, and that is an issue of national security. Making sure the power grid is secure is an essential part of any upgrade.

Los Alamos National Laboratory is tackling all of these challenges. Lab scientists are using advanced mathematics, computer modeling, machine learning, and cyberphysical network science to determine the best approaches to both protect our national power grid today and prepare it for tomorrow.

From Pearl Street to the Pacific

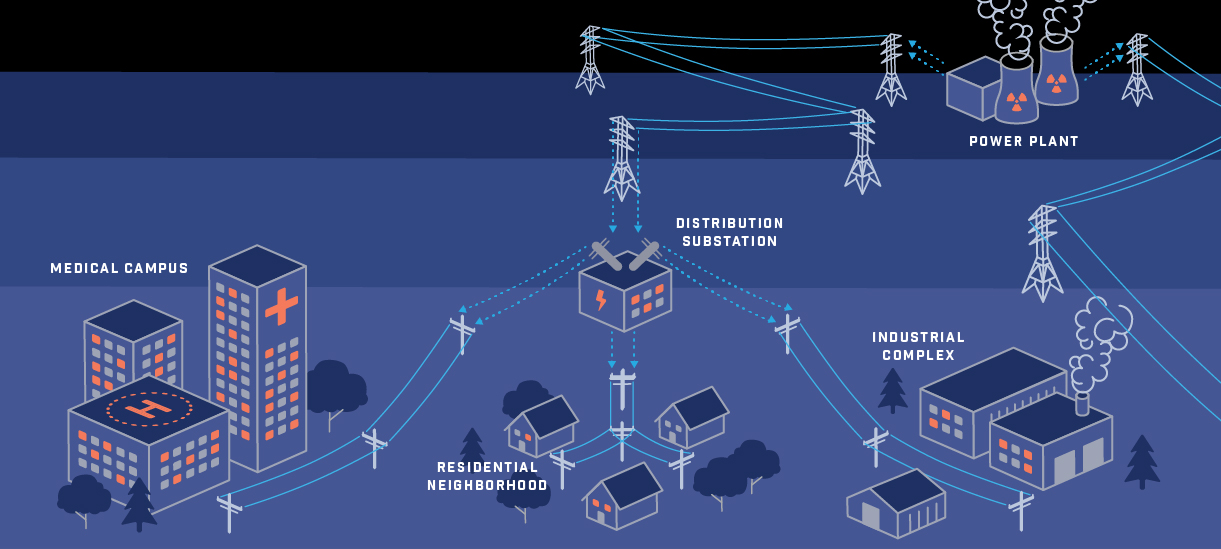

The very first power distribution in the United States began in 1882 on Pearl Street in lower Manhattan, when Thomas Edison built a system that used a coal-fired electrical generator to illuminate buildings for about 85 customers. Today, the national power grid comprises nearly 200,000 miles of high-tension transmission lines stretching from the Atlantic to the Pacific, operated by hundreds of utility companies. According to the U.S. Energy Information Administration, the grid distributes electricity generated by more than 8,600 power plants: about 63 percent of that electricity comes from fossil fuels, 20 percent from nuclear power, and 17 percent from renewable sources such as wind, solar, and hydropower. Whereas most communities began electrifying by taking advantage of economies of scale, building one large power plant (often fossil-fueled) and a distribution network, many communities today draw on electricity from many large-scale generators that together use a mix of energy sources.

Unlike resources such as water, electricity cannot easily be stored in large quantities, so it must be used almost as soon as it is generated. Electricity that is generated but not used is effectively wasted, which makes it vital that utility companies constantly monitor and accurately predict demand in their areas so that they can meet their customers’ needs. This is more challenging than it used to be because communities have grown in population and individuals use more electricity; American households today have dozens of electronic devices hungry for power. To meet this demand, the heart of each regional utility network contains a control room where highly trained operators work day and night to maintain a kind of “stasis” by matching demand and availability, while keeping watch for dramatic changes in either one.

In what has become a rather stressful job in some regions, operators make hour-to-hour decisions about where to purchase electricity and how best to distribute it. Their work is constrained by a lengthy list of factors that include the market economy, political and environmental regulations, hardware limitations, power-generation logistics, and, on occasion, unusual circumstances that could lead to catastrophic outages. For instance, nuclear power plants cannot ramp production up (or down) quickly enough to meet a sudden increase in need; coal plant production occasionally has to be slowed or stopped due to limits on allowable pollution; and wind and solar are only available when the weather permits. Furthermore, extreme weather, such as heat waves or cold snaps, can be an unexpected strain on the system. Computer algorithms help operators make decisions in real time, but overall, day-to-day normal operation is a constantly moving target.
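
The balancing act described above can be illustrated with a toy version of what engineers call economic dispatch. This is a minimal sketch, not how any real control room works: it assumes three hypothetical generators with made-up costs, ignores transmission limits and markets, and treats each plant's ramp limit (how far its output can move in one hour) as the only operational constraint. It fills demand from the cheapest units first, within what each unit can reach this hour.

```python
from dataclasses import dataclass


@dataclass
class Generator:
    """A hypothetical generating unit; every number used below is illustrative."""
    name: str
    cost_per_mwh: float  # marginal cost of one more megawatt-hour
    output_mw: float     # output during the current hour
    max_mw: float        # nameplate capacity
    ramp_mw: float       # largest change in output possible within one hour


def dispatch(generators: list[Generator], demand_mw: float) -> dict[str, float]:
    """Greedy merit-order dispatch for the next hour, respecting ramp limits."""
    # Each unit can only move within [output - ramp, output + ramp], clipped to [0, max].
    low = {g.name: max(0.0, g.output_mw - g.ramp_mw) for g in generators}
    high = {g.name: min(g.max_mw, g.output_mw + g.ramp_mw) for g in generators}
    schedule = dict(low)  # start every unit at the lowest output it can reach
    remaining = demand_mw - sum(schedule.values())
    if remaining < 0:
        raise ValueError("demand is below what the units can ramp down to")
    # Cover the rest of demand from the cheapest units first.
    for g in sorted(generators, key=lambda g: g.cost_per_mwh):
        extra = min(high[g.name] - schedule[g.name], remaining)
        schedule[g.name] += extra
        remaining -= extra
    if remaining > 1e-9:
        raise ValueError(f"short by {remaining:.1f} MW: units cannot ramp up fast enough")
    return schedule


units = [
    Generator("nuclear", 10.0, 1000.0, 1000.0, 0.0),  # treated as unable to ramp within the hour
    Generator("coal", 25.0, 400.0, 600.0, 100.0),
    Generator("gas", 40.0, 100.0, 500.0, 300.0),
]
print(dispatch(units, 1700.0))  # {'nuclear': 1000.0, 'coal': 500.0, 'gas': 200.0}
```

With 1,700 MW of demand, the cheap nuclear unit stays at full output, coal ramps up by its full 100 MW, and gas covers the last 200 MW. Asking for 2,000 MW raises an error, because together the units can reach only 1,900 MW within the hour, the kind of shortfall operators must anticipate.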

In addition to addressing these constraints, an operator must also consider the laws of physics that govern the power itself, including the fact that the flow of electricity can’t be directly controlled. Electricity does not follow a single chosen route; it divides among all available paths, with lower-impedance paths carrying more of it, and the network naturally settles into equilibrium. However, not all power lines are created equal; their capacities vary depending on where they are located, when they were constructed, and the resistivity of the materials used. Plus, some parts of the grid have not kept up with population growth. If one line becomes overloaded because demand is too high, the operator must relieve it by adjusting production levels at the power plants, ideally without any customers losing electricity. The capacity of each power line becomes especially important during an outage: once a line goes down, its power instantly redistributes onto other lines, and if those lines become overloaded and fail in turn, a single outage can cascade.
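
The redistribution described above can be sketched with the simplified linear ("DC") power-flow approximation that engineers commonly use for quick studies. Under that approximation, power moving between two points over parallel lines divides in inverse proportion to each line's reactance, so the lowest-impedance line carries the largest share but not all of it. The example is a minimal sketch with hypothetical line names, reactances, and ratings; it handles only parallel lines joining the same two buses, whereas real contingency studies solve the whole network.

```python
def split_flow(transfer_mw: float, reactances: dict[str, float]) -> dict[str, float]:
    """Flow on each of several parallel lines joining the same two buses.

    DC power-flow approximation: each line's share is proportional to its
    susceptance (1 / reactance).
    """
    susceptance = {line: 1.0 / x for line, x in reactances.items()}
    total = sum(susceptance.values())
    return {line: transfer_mw * b / total for line, b in susceptance.items()}


def overloaded(flows: dict[str, float], limits: dict[str, float]) -> list[str]:
    """Lines whose flow exceeds their (hypothetical) thermal rating."""
    return [line for line, flow in flows.items() if abs(flow) > limits[line]]


limits = {"A": 200.0, "B": 120.0, "C": 120.0}

# Normal operation: 300 MW shared by three lines; line A has half the reactance.
before = split_flow(300.0, {"A": 0.1, "B": 0.2, "C": 0.2})
print(before, overloaded(before, limits))  # A = 150, B = 75, C = 75 MW; nothing overloaded

# Line A trips: its 150 MW shifts instantly onto B and C.
after = split_flow(300.0, {"B": 0.2, "C": 0.2})
print(after, overloaded(after, limits))    # B = 150, C = 150 MW; both exceed 120 MW
```

Losing line A pushes both remaining lines past their 120 MW ratings; if protective equipment then takes them out of service too, the result is the kind of cascade the paragraph describes.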

Basically, maintaining the power grid is a multifaceted problem on a regular day. However, the complexity increases many-fold when companies consider making changes to the system, such as increasing the use of renewables, upgrading hardware or security features, or preparing for a coming storm. Furthermore, the grid can’t turn off for an upgrade. Harsha Nagarajan, a Los Alamos control theorist who works on grid resiliency, sums it up vividly when he says, “Changing a system in the power grid is like upgrading an airplane while it is flying.”

Ready for anything

For more than a decade, Los Alamos scientists have applied their expertise in advanced mathematics, physics, engineering, and machine learning to the challenges of operating and upgrading the power grid. The projects are numerous; some have been internally funded, while many are funded by the DOE Grid Modernization Laboratory Consortium (GMLC), which began in 2015. Furthermore, over the last few years, Los Alamos scientists have developed a Grid Science Winter School and Conference to educate participants and promote interdisciplinary solutions to problems in the energy sector.

Although they are varied, the Los Alamos grid science projects essentially address two central themes. First, the scientists seek to obtain a better understanding of how the grid works—where it is strong and where it is vulnerable. Second, they are working to optimize the grid for different purposes—on one hand improving resiliency, efficiency, and the use of renewables, while on the other hand striving for maximum cyber and physical security.

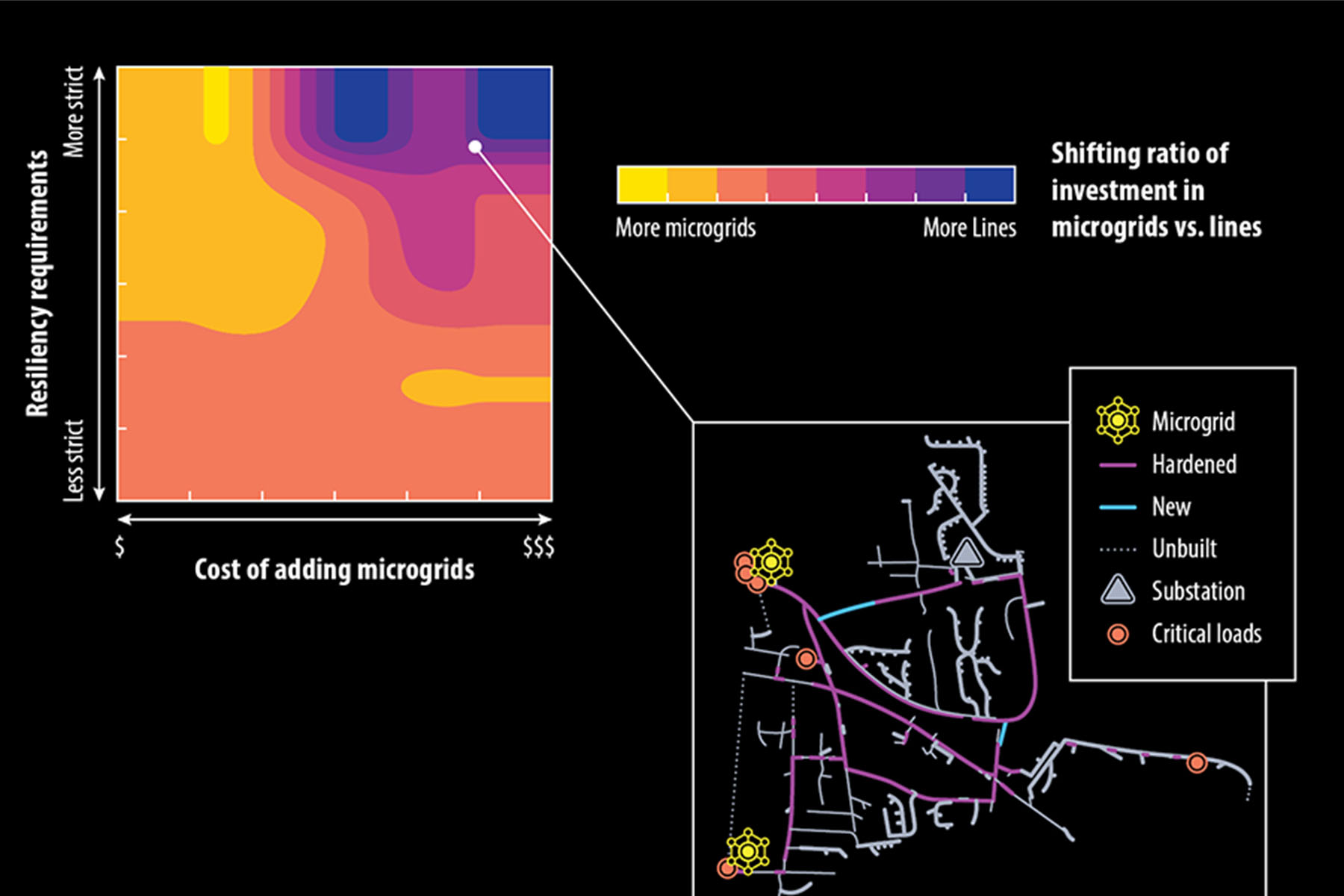

One such project is led by Russell Bent, a computer scientist and mathematician in the Lab’s Theoretical Division who is working on extreme-event modeling to help with understanding risk and performing contingency analysis. His team begins with data about a specific region’s power grid, such as the status of switches and the current values of loads and impedances. The team then works to determine which lines in that area are likely to go down (or already have), why, and how those failures affect the surrounding area’s power supply. The results help identify which specific lines or substations are the most important to protect.
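
A stripped-down version of this kind of contingency analysis fits in a few lines: take each line out of service one at a time and count the customers who are no longer connected to any power source. This is a minimal illustration on a hypothetical radial feeder. It checks connectivity only, ignoring the line loadings, impedances, and failure probabilities that a real extreme-event model would include, and it is not the Los Alamos team's method.

```python
from collections import deque
from typing import Optional

Line = tuple[str, str]  # a line is identified by the two buses it joins


def energized(sources: list[str], lines: list[Line], out_of_service: Optional[Line] = None) -> set[str]:
    """Buses still connected to at least one source (breadth-first search)."""
    neighbors: dict[str, list[str]] = {}
    for line in lines:
        if line == out_of_service:
            continue
        a, b = line
        neighbors.setdefault(a, []).append(b)
        neighbors.setdefault(b, []).append(a)
    seen = set(sources)
    queue = deque(sources)
    while queue:
        bus = queue.popleft()
        for nxt in neighbors.get(bus, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def rank_lines(sources: list[str], lines: list[Line], customers: dict[str, int]) -> list[tuple[Line, int]]:
    """Rank lines by how many customers lose power if that line alone fails."""
    impact = {}
    for line in lines:
        live = energized(sources, lines, out_of_service=line)
        impact[line] = sum(n for bus, n in customers.items() if bus not in live)
    return sorted(impact.items(), key=lambda item: item[1], reverse=True)


feeder = [("S", "A"), ("A", "B"), ("A", "C"), ("C", "D")]  # S is the substation
customers = {"A": 100, "B": 50, "C": 200, "D": 300}
for line, lost in rank_lines(["S"], feeder, customers):
    print(line, lost)
```

On this toy feeder the line leaving the substation is, unsurprisingly, the most critical (650 customers). Adding a tie line between B and D would drop the impact of losing line A-C to zero, which is the sort of trade-off an upgrade study weighs.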

Identifying high-stakes components in the network is useful for many reasons. For one, it may help focus attention on the right area after a hurricane or other emergency knocks out the power, so that priority can be given to restoring electricity to the largest number of customers. For another, it can guide the order in which periodic upgrades are rolled out, such as reinforcing or hardening utility poles, building water-blocking barriers around substations, or increasing security measures. It might sound straightforward, but one reason this mapping is difficult is that the power grid is not uniform. Bent explains that although his team can get quality information about the load capacities and physical condition of high-tension, long-distance power lines, residential power lines vary widely in how and when they were built, so information is often lacking about everything from their load capacities and sensors to the sturdiness of their utility poles.
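
For the restoration side of the problem, one classic scheduling result gives a feel for how priorities can be set. If a single crew must complete a set of independent repairs and the goal is to minimize total customer-hours without power, then doing the repairs in decreasing order of customers restored per hour of work (known as Smith's rule) is optimal. Real restorations involve multiple crews, travel time, and repairs that depend on one another, so treat this as a hypothetical sketch with made-up repair names and durations.

```python
def restoration_order(repairs: dict[str, tuple[int, float]]) -> list[str]:
    """Order independent repairs for one crew by customers restored per hour of
    work, highest first (Smith's rule); this minimizes total customer-hours
    without power when repairs do not depend on one another."""
    return sorted(repairs, key=lambda r: repairs[r][0] / repairs[r][1], reverse=True)


def customer_hours(order: list[str], repairs: dict[str, tuple[int, float]]) -> float:
    """Total customer-hours without power if repairs are done in this order."""
    clock, total = 0.0, 0.0
    for r in order:
        customers, hours = repairs[r]
        clock += hours              # this repair finishes at `clock`
        total += customers * clock  # its customers waited that long
    return total


# Hypothetical repairs: (customers restored, hours of work).
repairs = {"feeder_1": (1200, 6.0), "substation_2": (5000, 10.0), "line_3": (300, 1.0)}
order = restoration_order(repairs)
print(order, customer_hours(order, repairs))  # ['substation_2', 'line_3', 'feeder_1'] 73700.0
```

Slipping the quick one-hour line repair in second, rather than last, saves 600 customer-hours compared with simply tackling repairs in order of customers served (74,300 customer-hours).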


Due to the complexity involved in evaluating this system, Bent and his colleagues use optimization to answer many questions about the data they are analyzing. Optimization algorithms are designed to help make decisions: they search among the possible choices for the one that achieves a maximum or minimum, such as the fastest route, the least expensive upgrade, or the most power output. For instance, the algorithms may weigh many possible combinations of upgrades to determine the system design best able to withstand future extreme events.

Carleton Coffrin, a computer scientist who works with Bent, describes how difficult this is. He explains that in order to make a decision about routing power through the grid, humans must consult raw data and statistics as well as models that show the relationships among them. If both are available, a human can examine various possible scenarios and usually make a decision. However, sometimes there is an astronomical number of scenarios to consider; this is where computer optimization can help.

“Optimization uses algorithms to do this complex analysis in order to advise a person on the ideal decision,” says Coffrin. “It lets us compute the best-case scenario with a mathematical guarantee.”
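
The "mathematical guarantee" Coffrin mentions can be seen in its simplest form by brute force: if every combination of upgrades is checked, the best one cannot be missed. This sketch assumes a handful of hypothetical upgrades, each with a made-up cost and a made-up benefit (expected outage cost avoided), and a fixed budget. Exhaustive search is practical only for tiny problems, because each added option doubles the number of combinations; production solvers, such as mixed-integer programming solvers, reach the same kind of certified answer without listing every combination, by using bounds to rule out large groups of them at once.

```python
from itertools import combinations


def best_upgrades(options: dict[str, tuple[float, float]], budget: float) -> tuple[tuple[str, ...], float]:
    """Return the affordable set of upgrades with the largest total benefit.

    options maps a hypothetical upgrade name to (cost, benefit). Every subset
    is examined, so the result is provably optimal for these inputs.
    """
    names = list(options)
    best: tuple[str, ...] = ()
    best_benefit = 0.0
    for size in range(len(names) + 1):
        for subset in combinations(names, size):
            cost = sum(options[n][0] for n in subset)
            benefit = sum(options[n][1] for n in subset)
            if cost <= budget and benefit > best_benefit:
                best, best_benefit = subset, benefit
    return best, best_benefit


# Costs and benefits in millions of dollars (illustrative only).
options = {
    "harden_poles": (4.0, 9.0),
    "flood_wall": (3.0, 7.0),
    "spare_transformer": (2.0, 4.0),
    "tie_switch": (5.0, 10.0),
}
print(best_upgrades(options, budget=9.0))
# (('harden_poles', 'flood_wall', 'spare_transformer'), 20.0)
```

Here the best $9 million plan skips the single most beneficial option, the tie switch, because three cheaper upgrades together avoid more outage cost. With four options there are 16 combinations to check; every additional option doubles that number.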

Using optimization, the Los Alamos team has developed a software program called the Severe Contingency Solver that has been used by government agencies. This tool includes a graphical user interface to enable use by local analysts who might be preparing for an incoming storm. The Solver can’t prevent the power from going out, but it can be used prior to the storm to quickly identify places that will be the most vulnerable based on current infrastructure and usage, and after the storm it can determine the fastest way to restore electricity to a key location such as a hospital or military base.
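
Finding "the fastest way to restore electricity to a key location" can be framed as a shortest-path problem: treat each line as an edge weighted by the hours needed to repair it (zero if it is intact) and search for the cheapest route from any working power source to the critical site. This sketch uses Dijkstra's algorithm on a hypothetical damaged network. It assumes one crew doing repairs in sequence, so a route's cost is the sum of its repair times, and it ignores line capacity; it illustrates the idea and is not how the Severe Contingency Solver works internally.

```python
import heapq


def fastest_restoration(edges, sources, target):
    """Dijkstra's algorithm from any energized source to `target`.

    edges: (bus_a, bus_b, repair_hours) tuples; intact lines cost 0 hours.
    Returns (total repair hours, buses along the route), or None if unreachable.
    """
    graph = {}
    for a, b, hours in edges:
        graph.setdefault(a, []).append((b, hours))
        graph.setdefault(b, []).append((a, hours))
    best = {s: 0.0 for s in sources}  # cheapest known repair time to each bus
    previous = {}                     # back-pointers for rebuilding the route
    heap = [(0.0, s) for s in sources]
    heapq.heapify(heap)
    while heap:
        hours, bus = heapq.heappop(heap)
        if bus == target:
            route = [bus]
            while route[-1] in previous:
                route.append(previous[route[-1]])
            return hours, route[::-1]
        if hours > best[bus]:
            continue  # stale entry: a cheaper route to this bus was already found
        for nxt, cost in graph.get(bus, []):
            candidate = hours + cost
            if candidate < best.get(nxt, float("inf")):
                best[nxt] = candidate
                previous[nxt] = bus
                heapq.heappush(heap, (candidate, nxt))
    return None


# Hypothetical storm damage: repair hours per line (0.0 = undamaged).
damaged = [
    ("plant", "sub1", 0.0), ("sub1", "hospital", 8.0),
    ("plant", "sub2", 3.0), ("sub2", "sub3", 0.0), ("sub3", "hospital", 2.0),
]
print(fastest_restoration(damaged, ["plant"], "hospital"))
# (5.0, ['plant', 'sub2', 'sub3', 'hospital'])
```

The route through the intact substation sub1 looks shorter on a map, but its damaged final line needs 8 hours of work; the detour through sub2 and sub3 restores the hospital in 5.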

Bent and Coffrin work with many colleagues in the Analytics, Intelligence, and Technology Division and the Computer, Computation, and Statistical Sciences Division under Los Alamos’s Advanced Network Science Initiative. Through their combined expertise—which includes not only optimization but also machine learning, control theory, statistical physics, and graphical modeling—the researchers have been able to give science-backed advice to the DOE on many types of perils that threaten the power grid, such as cyberphysical attacks, solar geomagnetic disturbances, and nuclear electromagnetic pulses. The scientists have also advised the state of California on the reliability of its energy system that couples natural gas and electric power. Gas is being used more widely to fuel electric-power generation, but this infrastructure interdependency can also lead to more-complicated outages of not one but two major resources: a gas disruption brings about an electrical disruption as well.

Diversify, diversify

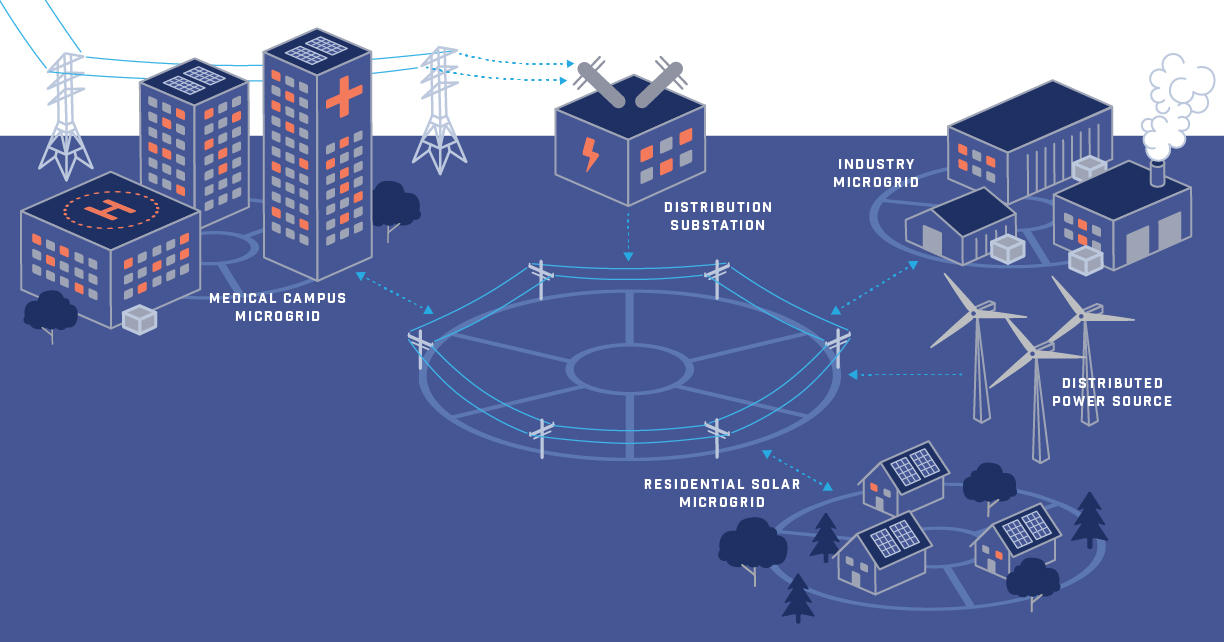

In the aftermath of Hurricane Sandy, a few places in the hardest-hit communities remained illuminated because they were not dependent on a single source of electricity. The investment company Goldman Sachs, for instance, had a generator for its Wall Street office building, and Princeton University had a small power station, enabling its entire campus to operate as a “microgrid.” Microgrids are relatively small electricity networks (ranging in size from a single building to a few blocks) that have their own power sources. They can operate in tandem with the large utility grid, even contributing power to it when possible, or they can disconnect and operate independently as “islands.”

When looking for the optimal way to restore power after a large outage, the presence of microgrids can help by providing power to a subset of users until the main grid gets going again. This concept of integrating diverse generation sources not only increases resilience for a community but also encourages the incorporation of more renewable sources of energy, such as residential solar panels. For these reasons, the GMLC and others in the field envision a modern utility grid as one that incorporates “both centralized and distributed generation and intelligent load control.”
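
When a microgrid disconnects and runs as an island, its local generation is usually smaller than the total demand it normally serves, so something must decide which loads stay on. A minimal sketch, assuming a hypothetical campus with made-up loads, demands, and priority levels (1 is most critical), admits loads in priority order and sheds any that no longer fit within the remaining local capacity. Real islanding controllers also manage frequency, voltage, and generator limits, none of which appear here.

```python
def island_loads(loads: list[tuple[str, float, int]], capacity_kw: float) -> tuple[list[str], float]:
    """Choose which loads stay on when a microgrid islands.

    loads: (name, demand_kw, priority) with 1 = most critical (hypothetical scheme).
    Loads are admitted in priority order; any load that no longer fits in the
    remaining local capacity is shed. Returns the served loads and spare capacity.
    """
    served, spare = [], capacity_kw
    for name, demand, _priority in sorted(loads, key=lambda load: load[2]):
        if demand <= spare:
            served.append(name)
            spare -= demand
    return served, spare


campus = [("dorm_heating", 300.0, 3), ("clinic", 120.0, 1),
          ("data_center", 250.0, 2), ("lighting", 60.0, 4)]
print(island_loads(campus, capacity_kw=500.0))
# (['clinic', 'data_center', 'lighting'], 70.0)
```

Dorm heating is shed because it no longer fits after the clinic and data center, while the smaller lighting load still does. A greedy rule like this is easy to reason about, but it does not always use capacity as fully as an exact optimization would.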

A 2016 attack on the Ukrainian power grid put energy vulnerabilities into the spotlight

Since fossil fuels are a finite resource and energy use is expected to keep rising, a modern grid cannot address only reliability and resilience; it must also address efficiency and sustainability. With these criteria in mind, Los Alamos scientists have applied their suite of expertise to grid design, grid control, and grid resiliency. These complex optimization problems take into account new technologies and needs, such as residential solar panels that can provide energy to the grid on sunny days but not at night, smart appliances that can be programmed to run when demand on the grid is low, and a proliferation of electric vehicles that require charging for long periods of time.
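
Scheduling a smart appliance for a low-demand period is a small optimization problem of its own. This sketch assumes a hypothetical hourly forecast of grid demand and an appliance that must run for a fixed number of consecutive hours; it slides a window across the forecast and picks the start hour with the lowest total demand. A real scheme might respond to prices, emissions, or signals from the utility instead.

```python
def best_start_hour(forecast_mw: list[float], run_hours: int) -> tuple[int, float]:
    """Start hour whose run window has the lowest total forecast demand."""
    if not 0 < run_hours <= len(forecast_mw):
        raise ValueError("the run must fit inside the forecast window")
    window = sum(forecast_mw[:run_hours])  # demand during hours 0 .. run_hours-1
    best_start, best_total = 0, window
    for start in range(1, len(forecast_mw) - run_hours + 1):
        # Slide the window one hour: add the new last hour, drop the old first hour.
        window += forecast_mw[start + run_hours - 1] - forecast_mw[start - 1]
        if window < best_total:
            best_start, best_total = start, window
    return best_start, best_total


# Hypothetical demand forecast (MW) for eight consecutive hours starting at midnight.
forecast = [620, 580, 540, 510, 530, 600, 700, 760]
print(best_start_hour(forecast, run_hours=3))  # (2, 1580): run from 2 a.m. to 5 a.m.
```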

Key to this modernization is connectivity. Sensors at home and distributed around the grid give feedback about demand and availability—but, as with many things in life, these benefits come with a few risks. The interconnectedness also opens the door to new threats.

Threat of a cyber storm

Clearly, not all power outages are caused by hurricanes. In recent years, Americans have become increasingly aware that cyber attacks threaten many areas of daily life, which could very well include the power grid. Since the vision of a modernized grid includes a good deal of automation and connectivity, it also requires significant attention to cyber security. In fact, a relatively minor power outage in Kiev, Ukraine, in December 2016 has since been identified as the result of an attack using malware that has been referred to as Industroyer or Crash Override. This particular malware is considered to be sophisticated in its ability to use code to disrupt physical systems. Not only that, the malware is adaptable and deletes files to hide its presence.

This relatively new phenomenon of interdependencies between computers and infrastructure has created a whole new area of concern. Cyberphysical security refers to the idea that there are physical systems and devices with computational capability—similar to that of general purpose computers—that need to operate securely. These devices are found everywhere, such as a refrigerator that has a camera for its owner to check its contents while at the store or a coffee machine that can be activated by a household virtual assistant. Safety cameras and controls in modern cars are a good example of why cyberphysical devices need to be kept secure. For instance, it isn’t safe for a driver to have to enter a password before braking, so how does one authenticate the command to brake and ensure it is actually coming from the driver?
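
One standard way to let a device confirm that a command really came from an authorized sender, without anyone typing a password, is a message authentication code: sender and device share a secret key, and every command carries a short cryptographic tag that only a key holder could compute. This sketch uses HMAC-SHA256 from Python's standard library and adds a counter so that a recorded valid command cannot simply be replayed later. The key-provisioning step, the command text, and the `CommandChannel` class are hypothetical, and real grid and vehicle protocols define their own authentication schemes; this illustrates the general technique only.

```python
import hashlib
import hmac
import secrets


class CommandChannel:
    """Hypothetical endpoint that signs and verifies control commands."""

    def __init__(self, key: bytes):
        self.key = key          # shared secret, assumed to be provisioned securely
        self.last_counter = -1  # receiver side: highest counter accepted so far

    def _tag(self, counter: int, command: str) -> bytes:
        message = f"{counter}:{command}".encode()
        return hmac.new(self.key, message, hashlib.sha256).digest()

    def sign(self, counter: int, command: str) -> tuple[int, str, bytes]:
        """Sender side: attach an HMAC-SHA256 tag to a numbered command."""
        return counter, command, self._tag(counter, command)

    def verify(self, counter: int, command: str, tag: bytes) -> bool:
        """Receiver side: accept only authentic, never-before-seen commands."""
        if not hmac.compare_digest(self._tag(counter, command), tag):
            return False  # forged, corrupted, or signed with a different key
        if counter <= self.last_counter:
            return False  # a replay of an older, once-valid command
        self.last_counter = counter
        return True


key = secrets.token_bytes(32)
control_center, breaker = CommandChannel(key), CommandChannel(key)
message = control_center.sign(1, "OPEN breaker 7")
print(breaker.verify(*message))  # True: authentic and new
print(breaker.verify(*message))  # False: same message replayed
```

Verification takes microseconds and needs no human input, which is why this family of techniques suits commands that must act immediately.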

Usage sensors, circuit protectors, cameras, and even fire-suppression systems are cyberphysical devices that could be vulnerable in the power grid because they operate on a network. In the past, these devices were designed with functionality, reliability, and safety in mind, but not cyber security. At the same time, the tools and methods developed for defending classical cyber systems don’t always work for cyberphysical systems. When assessing the security of the current and future grid, Los Alamos scientists are trying to identify how to choose or improve physical devices and components in order to make a system that is robust against deliberate attacks.

“Security is not a goal that you achieve and then you’re done,” explains Alia Long, a Los Alamos electrical engineer. “It’s an ongoing process determined by your need and the potential threat.” Long insists that although it may be tempting to shy away from modernization for fear of hackers, doing so is not in the grid’s best interests. She explains that although a more modern, automated grid may introduce new vulnerabilities because of its connectedness, it will ultimately make it easier to detect and respond to problems. That ability matters: if the grid has to be shut down to fix a cyber problem, the attacker may already have achieved the goal.

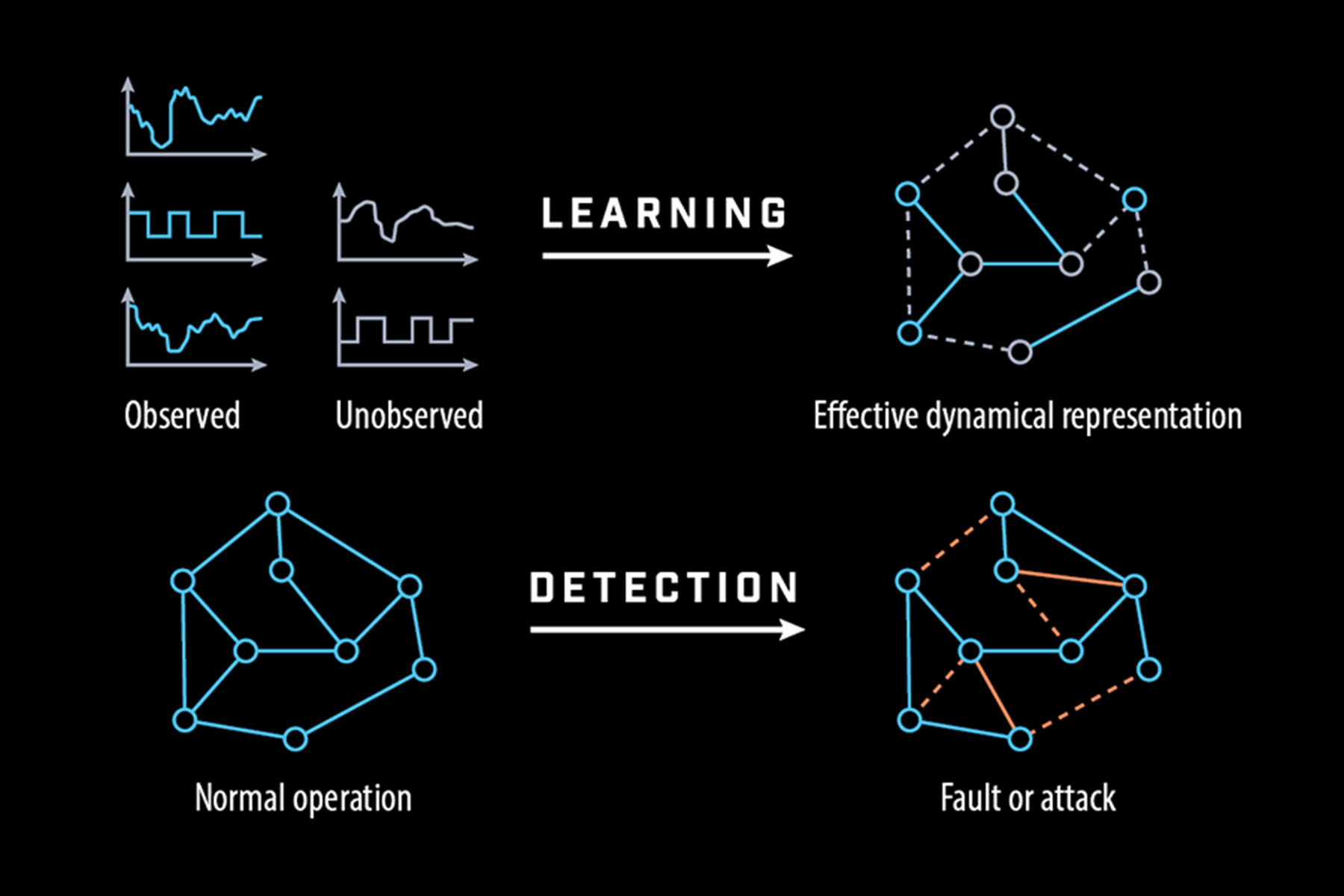

In addition to assessing how to secure cyberphysical devices, Long is also working with Los Alamos theoretician Nathan Lemons on a project to detect suspicious behavior. Lemons is using statistical learning theory to understand normal activity on the power grid as a way of spotting anomalies. This project is difficult because of the sheer amount of heterogeneous information traffic: there are continuous variables, such as electricity demand, which fluctuates up and down, and discrete variables, such as an outage from a downed power line. Although a lot of research has gone into models that use one or the other of these two types of data, there has been much less theoretical work on combining discrete and continuous data into one model.

“It is especially novel and difficult to try and create a model in a dynamic setting where one learns from the data how the model is expected to evolve over time,” says Lemons. In addition, Lemons must account for latent, or hidden, variables in the grid. These could be quantities that are unknown or unavailable, such as unknown activity on a given line or the unreported status of a switch. By relating the observed data to these unobserved variables, Lemons is able to infer dependencies in the network.
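
Inferring a hidden quantity from an observed one can be illustrated with Bayes' rule. In this toy sketch, a switch's state is the latent variable and the only observation is the power flow measured on the line downstream of it. It assumes hypothetical, made-up statistics: a flow of about 40 MW (standard deviation 8 MW) when the switch is closed, about 0 MW when it is open, and a 95 percent prior that the switch is closed. The models described above are far richer, learning such relationships from data and how they evolve over time; this single-step example only shows the underlying idea.

```python
import math


def log_normal_pdf(x: float, mean: float, sd: float) -> float:
    """Natural log of the normal probability density at x."""
    return -0.5 * ((x - mean) / sd) ** 2 - math.log(sd * math.sqrt(2 * math.pi))


def prob_switch_closed(flow_mw: float, prior_closed: float = 0.95,
                       closed_mean: float = 40.0, closed_sd: float = 8.0,
                       open_mean: float = 0.0, open_sd: float = 1.0) -> float:
    """Posterior probability that the hidden switch is closed, given one flow reading."""
    log_closed = log_normal_pdf(flow_mw, closed_mean, closed_sd) + math.log(prior_closed)
    log_open = log_normal_pdf(flow_mw, open_mean, open_sd) + math.log(1.0 - prior_closed)
    log_odds = log_closed - log_open
    # Convert log-odds to a probability without overflowing for extreme readings.
    if log_odds >= 0:
        return 1.0 / (1.0 + math.exp(-log_odds))
    odds = math.exp(log_odds)
    return odds / (1.0 + odds)


for reading in (0.2, 3.0, 38.0):
    print(f"{reading:5.1f} MW -> P(closed) = {prob_switch_closed(reading):.4f}")
```

Working in log space keeps the calculation stable even for readings far from both expected values, where the raw probability densities would underflow to zero.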

Lemons is trying to determine how much data is needed to create a reliable model of the cyberphysical system that can be used for detecting anomalies. For instance, if a network switch is turned off when it should be on, it would be an obvious red flag. However, if the switch is on when it is supposed to be on, can scientists detect if the electrical activity on the other side of the switch is normal or abnormal?
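
The two cases in that example call for different kinds of checks, which is one reason mixing discrete and continuous data is hard. Here is a deliberately simple sketch, not the statistical model Lemons is developing: a discrete check compares the switch's reported state with its expected state, and a continuous check flags flow readings more than three standard deviations from their historical mean (a z-score test). The history values, threshold, and state labels are hypothetical.

```python
import statistics


def check_reading(switch_state: str, expected_state: str, flow_mw: float,
                  history_mw: list[float], z_limit: float = 3.0) -> str:
    """Classify one reading using a discrete check and a continuous check."""
    # Discrete check: the obvious red flag of a switch in the wrong position.
    if switch_state != expected_state:
        return "switch state mismatch"
    # Continuous check: the switch looks right, but is the flow behind it typical?
    if switch_state == "closed":
        mean = statistics.fmean(history_mw)
        sd = statistics.stdev(history_mw)  # needs at least two readings
        if sd == 0:
            return "normal" if flow_mw == mean else "flow anomaly"
        z = (flow_mw - mean) / sd
        if abs(z) > z_limit:
            return f"flow anomaly (z = {z:.1f})"
    return "normal"


history = [40.0, 42.0, 38.0, 41.0, 39.0, 40.0]  # hypothetical past flows, MW
print(check_reading("open", "closed", 0.0, history))     # switch state mismatch
print(check_reading("closed", "closed", 41.0, history))  # normal
print(check_reading("closed", "closed", 55.0, history))  # flow anomaly (z = 10.6)
```

A flagged reading is only a prompt for investigation; storms, faulty sensors, and unusual but legitimate demand can all trip the same test.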

Substation substantiation

Across the Lab, the breadth of work addressing the challenges of maintaining and improving the power grid is formidable. And some of it, in the form of scientific advice to the DOE and industry, has already been applied to improve the national grid. However, with such a complex national system and lengthy list of external participants, there has not been an easy mechanism for real-world, or real-time, feedback about the effectiveness of the science.

Fortunately, validation is about to become much easier. The Laboratory’s own electrical grid is currently being upgraded, including a rebuild of its Supervisory Control and Data Acquisition (SCADA) system. Seizing this unique opportunity, Lab teams are positioned to set up a cyberphysical-systems testbed within the Lab’s new network so that they can experiment with, study, and validate everything from usage optimization algorithms to anomaly or intrusion detection on a real power grid. The Power Validation Center, as they are calling it, is scheduled to be up and running in 2020 and, by all accounts, will be a one-of-a-kind capability.

“We hope to use this system to train people for incident response, improve power grid operations, and test updates so they don’t impact operations,” says Long. She explains that the Center will be “ideally suited for addressing the emerging science of cyberphysical systems with access to unique data sets and the ability to perform experiments on critical cyberphysical infrastructure.”

With an eye on the prize of a resilient, sustainable, and secure power grid, Los Alamos scientists are working hard to keep the lights on and the nation secure. Their extraordinary combination of expertise, along with their upcoming Power Validation Center, enables them to investigate exciting new approaches to the mundane task of delivering electricity to buildings. With each improved algorithm, Americans move closer to a future in which a power outage is once again a brief respite to enjoy, because it won’t be long before the grid is running again.

Best Case Scenario

As the complexity of the world increases, seemingly simple tasks, such as sending electricity from a power plant to a neighborhood, become elaborate problems for which there is no single right or wrong approach. Complex problem solving is often an exercise in finding the best solution for a specific situation after weighing the benefits and risks of many options, and the more options and potentially conflicting objectives there are, the more difficult it is to find the best approach.

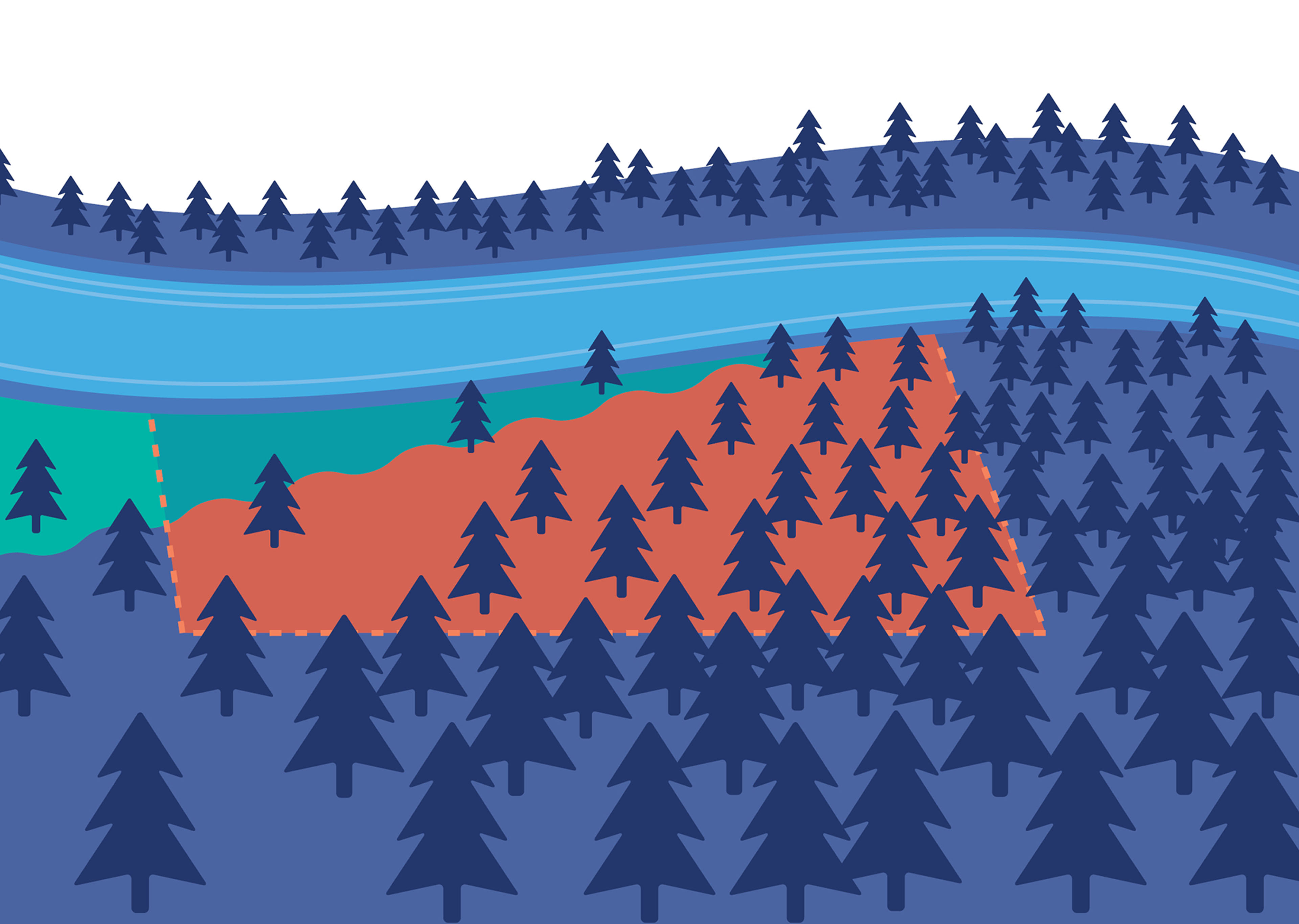

Mathematical optimization is a collection of principles and methods used to find solutions to complex problems. Optimization problems are often defined by seeking a maximum or minimum, such as the lowest cost or the greatest reliability. For instance, a simple optimization problem could be: “What is the fastest route to drive to work when the main thoroughfare is under construction?” Or, as in the classic optimization problem shown in the accompanying illustration, “What is the maximum area that can be enclosed with a fixed length of fencing, using an existing barrier, such as a river, as one of the four sides?”
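
The river version of the fencing problem can be solved with a single derivative. Let L be the total length of fence and x the length of each of the two sides perpendicular to the river (both symbols are introduced here for illustration), so the side parallel to the river has length L - 2x:

```latex
\begin{align*}
A(x) &= x\,(L - 2x), \qquad 0 < x < \tfrac{L}{2},\\
\frac{dA}{dx} &= L - 4x = 0 \;\Longrightarrow\; x = \frac{L}{4}, \quad L - 2x = \frac{L}{2},\\
\frac{d^2A}{dx^2} &= -4 < 0 \;\Longrightarrow\; A_{\max} = \frac{L}{4}\cdot\frac{L}{2} = \frac{L^2}{8}.
\end{align*}
```

For example, 400 meters of fence (an illustrative figure) encloses at most 20,000 square meters, using two 100-meter sides and one 200-meter side parallel to the river.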

But what if the problem added the requirement that as few trees as possible be cut down, without leaving any inside the fence? Or that the fence enclose as little of an existing floodplain as possible? As the variables and constraints increase, so does the complexity of the calculation.

Utility companies may be looking for the least expensive way to deliver electricity to their customers on a given day, but the constraints they face include a litany of factors, from generator limitations and environmental regulations to the physical constraints of the power lines and the laws of physics. Scientists at Los Alamos are using high-performance computers to run optimization algorithms that can include as many as 2¹⁰⁰ possible combinations of variables to answer just one question. This capability allows them to tackle all sorts of grid-optimization challenges, such as incorporating the most renewable energy into a specific area, restoring power to the most customers after an outage, or adding security features in the most effective places for the least cost.
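
A little arithmetic shows why problems of this size cannot be solved by checking every possibility. Each independent yes/no decision doubles the number of combinations, so 100 such decisions yield 2^100, about 1.3 × 10^30. The sketch assumes, optimistically and hypothetically, a computer that could evaluate 10^18 combinations per second (roughly one per operation on an exascale machine); even then, checking them all would take about 40,000 years. Optimization algorithms avoid this by using mathematical bounds to eliminate huge groups of combinations without examining them one by one.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def brute_force_years(num_decisions: int, evaluations_per_second: float) -> float:
    """Years needed to evaluate every combination of independent yes/no decisions."""
    combinations = 2 ** num_decisions
    return combinations / evaluations_per_second / SECONDS_PER_YEAR


for n in (20, 50, 100):
    print(f"{n:3d} decisions: {2 ** n:.3e} combinations, "
          f"{brute_force_years(n, 1e18):.3g} years at 1e18 per second")
```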